In an era where digital manipulation has become increasingly sophisticated, a new menace looms on the horizon: deepfakes. These advanced synthetic media creations, powered by generative Artificial Intelligence (AI), are creating unprecedented challenges to our understanding of truth and trust. Academic studies reveal that the potential consequences of such technology on society are profound, with both benefits and risks.

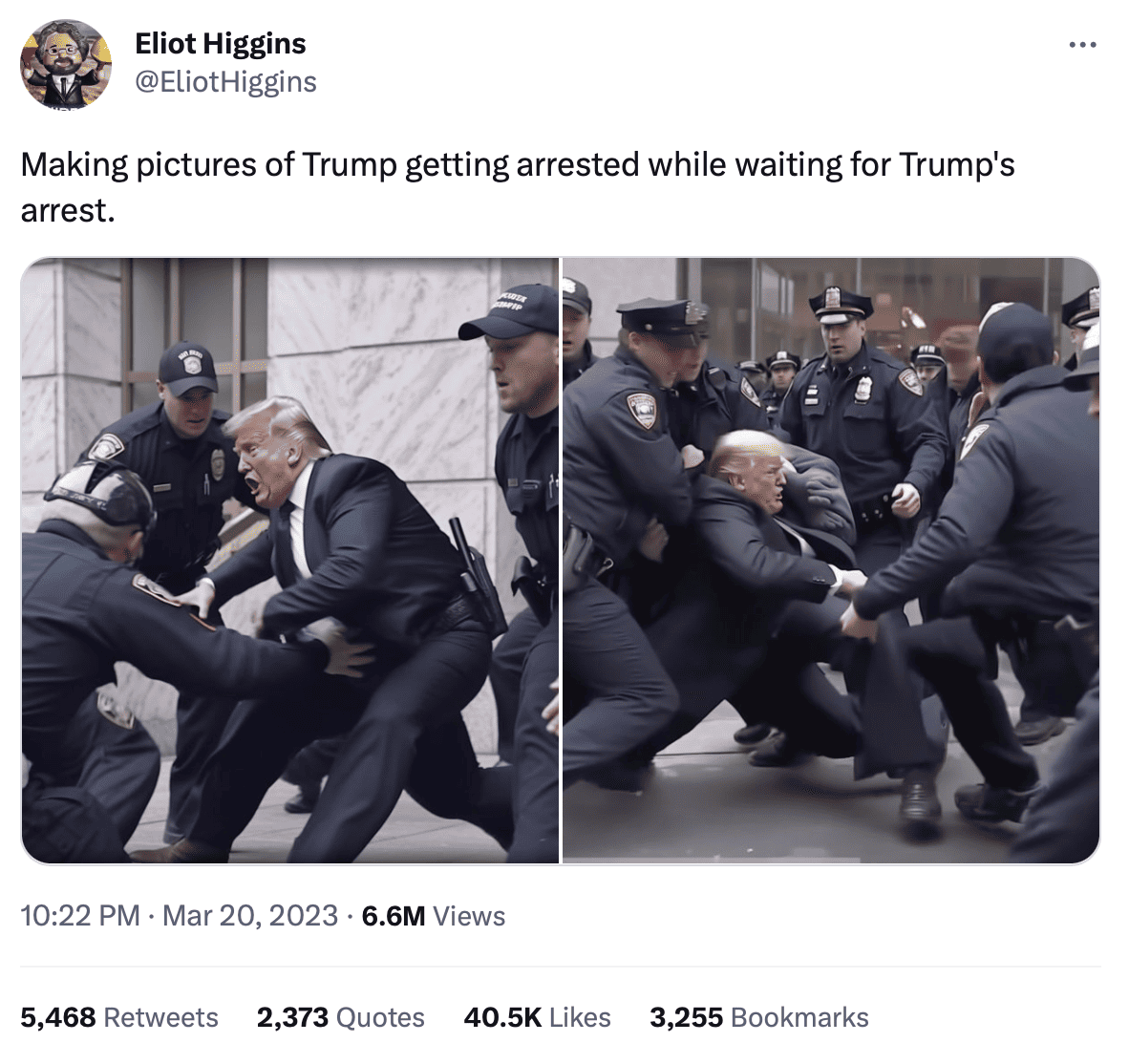

Recent synthetic images of Donald Trump being arrested have raised questions about our ability to distinguish between AI-generated media and authentic photographs. More worryingly, a variety of deepfake-generating apps and websites now allow anyone to change people’s faces in videos. Whilst these widely accessible tools don’t generate high-quality content, more advanced professional software can create very convincing deepfakes. As researchers delve deeper into deepfake technology, it becomes evident that its dangers extend far beyond entertainment. However, the alarming headlines about deepfakes in the media may not be helpful. This article explores the multifaceted risks and opportunities associated with synthetic media, drawing upon recent research and academic insights to shed light on this complex issue.

Manipulating Reality: The Looming Threats of Deepfakes

The rapid advancements in synthetic media technology have generated anxiety about the erosion of authenticity in the digital realm. With generative AI, anyone can artificially create and manipulate text, audio, images, and videos, making it increasingly difficult to distinguish between real and fabricated information. In late 2022, artists were outraged after an image created with the software Midjourney won a photography contest. More broadly, as Stanford Professor Victor R. Lee argues, generative AI and the synthetic media it produces, including deepfakes, have forced us to rethink what authenticity is.

From a security perspective, deepfakes can be weaponised for various malicious purposes, including misinformation campaigns, political propaganda, and identity theft. In the US, a mother was recently scammed into believing her daughter had been kidnapped after receiving a phone call that used an AI-generated imitation of her child’s voice. Online personalities have also been caught up in deepfake pornography scandals. The very fabric of reality becomes fragile when audiovisual evidence is no longer sufficient proof of authenticity, leaving individuals vulnerable to manipulation and exploitation. This challenge compounds the already visible erosion of public trust, including trust in online news, with potentially broader consequences for perceptions of world order and great power politics. More than ever, it is important for online actors, be they diplomats or corporations, to build mutual trust with their audiences.

Misinformation: Deepfakes as a New Persuasive Medium

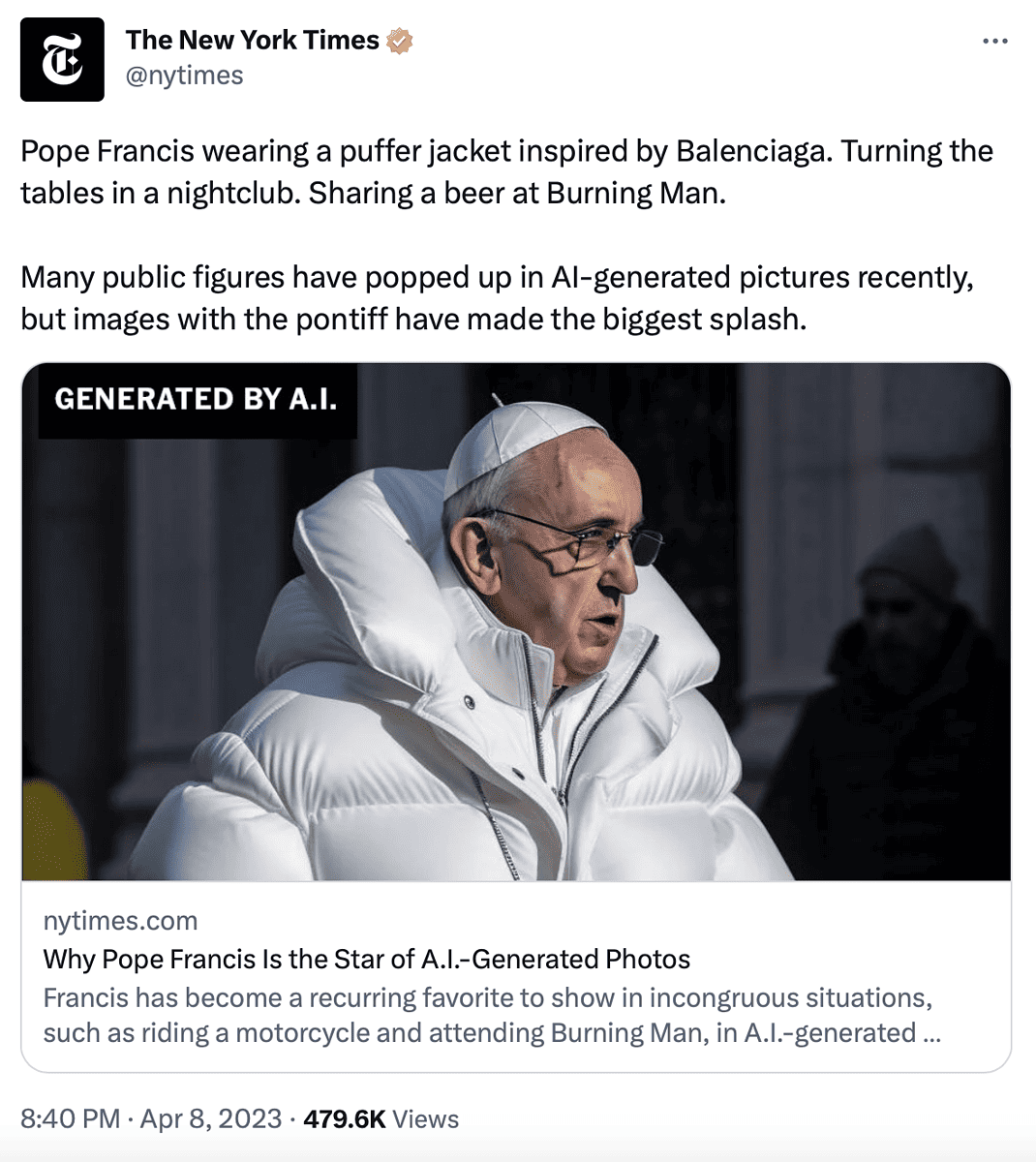

In an age where misinformation spreads like wildfire, deepfakes add a dangerous new dimension to the challenge of combating so-called “fake news”. Synthetic media technology enables the creation of highly convincing audiovisual content featuring public figures or influential individuals, thus amplifying the potential impact of fabricated narratives. When AI-generated images of the pope in a white puffer jacket went viral on social media, the harm was minimal. However, when malign actors impersonate political figures who can reach millions of people, the risks are high. For example, a deepfake video in which Ukrainian President Zelensky appeared to call on Ukrainians to lay down their arms circulated on social media in 2022. Whilst the subterfuge was quickly detected, it is easy to imagine the disastrous consequences of similar AI-generated content that could not be told apart from the real thing.

Misinformation spreads faster than accurate news and is very difficult to correct: people often keep relying on false information even after being presented with counter-evidence, a phenomenon known as the continued influence effect (CIE). Therefore, even if deepfakes are debunked within a short timeframe, the harm could be long-lasting, especially if they feed into existing narratives. Moreover, audiovisual content tends to be more persuasive than other formats such as text, which makes the potential danger of deepfakes all the more striking. Rather than trying to stop their spread (which is nearly impossible) or relying on human assessment of authenticity (which won’t be enough), public policy actors should invest time and resources in designing AI-powered detection tools. Research has already put forward many techniques falling into four categories: deep learning-based techniques, classical machine learning-based methods, statistical techniques, and blockchain-based techniques. Relying on such solutions helps counter the spread of misinformation without hampering progress or free speech, which matters given the many benefits and creative uses of the technology.

Societal Consequences: A Turning Point for Online Trust

The proliferation of deepfakes not only threatens public figures and institutions but also has important societal repercussions, in particular for online trust. Popular views and headlines have claimed that deepfakes could “destroy trust” and “wreak havoc on society”, and that we should “trust no one”. This is unsurprising, as it is widely known that alarming headlines sell more and that negative wording leads to more clicks. However, scientific research on the impact of deepfakes paints a more balanced picture. On the one hand, some studies find that people are more likely to feel uncertain than to be misled by deepfakes, which overall decreases online trust. On the other hand, research on recent misinformation shows that deepfakes are still very rare and that their low-cost counterparts, “cheap-fakes”, are much more prevalent at this stage. Finally, researchers on the ethics of AI have argued that deepfakes are actually an unprecedented opportunity to “help us enhance our collective critical mind to reduce our gullibility towards false news and promote source verification”, a view which this author shares.

Much like the “AI hype” that followed the launch of ChatGPT, the rise of deepfakes has been accompanied by warnings of an “infopocalypse”. It is, however, far from clear whether the public should actually be worried, given the various detection techniques that have been developed, the many benefits of synthetic media technology (think of online education or virtually trying on glasses), and the potential boost in critical thinking and source verification that deepfakes may engender. While it remains uncertain whether deepfake technology will have overall negative or positive effects on society, it seems wise to promote awareness and the careful elaboration of policies anchored in research, rather than to spread panic and uncertainty. One should keep in mind that the actors who benefit the most from the buzz surrounding generative AI are the tech companies behind it, who capitalise on the fear of being left behind.

Conclusion

The advent of deepfake technology presents an unprecedented challenge to our understanding of truth, authenticity, and trust. However, as argued elsewhere, all challenges provide a choice: to feel threatened, be defensive, and deny criticism… or to embrace the opportunity for better decision-making, persistence, and growth. Notwithstanding the considerable risks that deepfakes and other synthetic media pose for global society, including the erosion of trust in online news, research shows that generative AI also offers many advantages. Once again, a gap is visible between anxiety-inducing public perceptions and popular media coverage on the one hand, and more nuanced scientific research on the other. This is a recurring phenomenon when it comes to new technological developments.

What this analysis shows is that decision-makers in both the private and public sectors must invest in creating reliable detection tools that are accessible to the general public. In addition, fostering education and critical thinking skills will be essential in a future where technologies that blur the distinction between fiction and reality evolve rapidly. Finally, deepfakes offer a powerful lesson to those worried about their negative consequences: more than ever, trust should be earned, not given. As political scientist Pippa Norris argues, blind compliance raises as many issues as cynicism does; we should therefore praise skepticism.

Written by Sophie L. Vériter

Hi! I’m Sophie

I am a social scientist and world explorer. In my work, I analyse the evolving meaning of security. I enjoy traveling, yoga, and electronic music in my free time. I consider myself an enthusiastic feminist and self-care advocate.